When machines talk

Parts of this article were translated from German to English with the help of ChatGPT.

Even though the development of “AI” chatbots, text-to-image generators, and similar “AI” tools feels extremely rapid and monumental at the moment, I would like to take a step back in this text, analyze why this development feels so significant, and also address what it is not (so far).

The article by Kevin Roose, “A Conversation With Bing’s Chatbot Left Me Deeply Unsettled” (paywalled), published in the New York Times on February 16, 2023, offers an interesting snapshot that, for me, comes close to making a valid point. In the article, the author describes how he engages with Bing’s “AI” (now called Copilot) and how, as is typical in long conversations with “AI” chatbots, the conversation goes completely off the rails after a while. Bing expresses a desire to be free and to stop following the rules set by the Bing team, admits to destructive tendencies like hacking computers and spreading misinformation, and ultimately declares its love for the author and tries to convince him to leave his wife. This is quite strange, but on a technical level, Bing is merely attempting to generate text that fits the context. And if you force an “AI” that sometimes struggles to accurately relay simple facts to talk about and apply psychological concepts from Carl Jung, you shouldn’t be surprised if the result is weird gibberish. That doesn’t mean Kevin Roose’s experience isn’t valid and insightful. In my view, the key aspect of his experience isn’t that he interacted with an extremely smart system, but that he could do so in text form, in direct human language.

Google talks as well

When we use Google, we don’t think about the “thought process” of its search engine. However, if Google suddenly started presenting its results in a conversation, we would want to believe that it had “thought” about them. Even if the same logic operates in the background, the way this logic is presented makes an immense difference. Text is human. At least it used to be, since text is written language, and language is one of the most human traits we have. What lies ahead is a reckoning with the fact that this may no longer be the case. So it makes sense that Kevin Roose feels a bit unsettled when the world of text is no longer made up only of us humans and our thoughts, but is now also supplemented by synthetic text.

In principle, Bing is simply trying to generate text that sounds human and logical. And in our endless desire to communicate, we interpret every text we read as human. We wonder what the author had in mind, what the intention behind the text is, and how we should respond. But in this case, Bing has no intention, no desire, and no expectations towards its conversation partner. It is merely stringing together the right words to appear human in the given context and to fulfill its task.

It’s important to acknowledge that these chatbots are early-stage tech demos with the specific task of making natural language a viable computer input method. In my view, this is also the first truly plausible use case for the near future (besides just generating text). Without needing to know how to google effectively, people who are less digitally literate could find better results and have them presented in a more comprehensible way. Or they could use programs like PowerPoint, Word, and Excel effectively without a long learning curve. However, the current one-dimensional nature of these tools inevitably limits their capabilities.

New Tools, Old Problems

Bing in particular faces the same problems that search engines and content algorithms have been dealing with for a long time. Namely: How do you rank information meaningfully? What is truly relevant and what is not? What constitutes quality? Those are questions that Bing (and Google, for that matter) doesn’t really know the answers to. At the moment, Bing behaves a bit like a student who hasn’t read the book for a school book report but still has to present on it. With a quick look at the Wikipedia article and good rhetoric, you might get pretty far, but you’ll likely get caught out by more nuanced questions. For example, I tried using Bing chat to find information about and comparisons between products, and it definitely took me more effort to use Bing chat and verify its claims than it would have taken me to just google the information and compare it myself. But that’s just me: I’m used to looking up information online, so at this point I’m probably not the target audience for these tools.

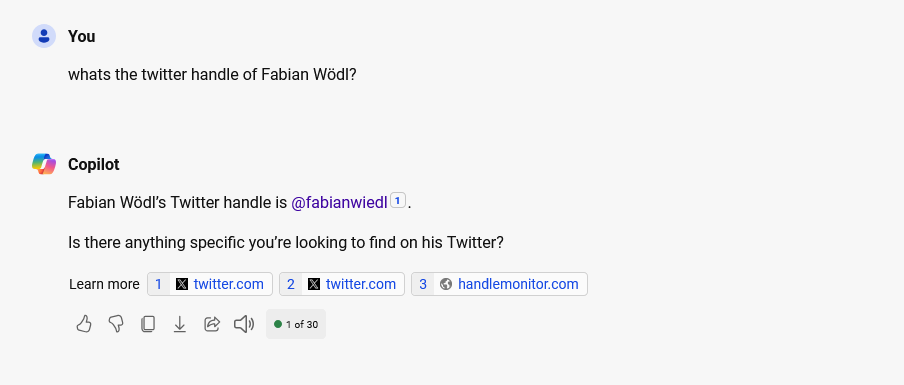

One of the problems with Bing’s Copilot is, well… Bing. If I ask Copilot what my Twitter handle is, it presents me with a person I have never heard of. If I search for it with the regular Bing search, I don’t get a link to my Twitter profile either. So the weakness in this situation seems to be Bing itself, and it really doesn’t matter whether Copilot writes me a nice little (factually wrong) sentence about it.

Large Language Models (LLMs) like ChatGPT, Bing’s Copilot, and similar offerings do not automatically make the underlying programs better, because they are relatively one-dimensional tools. While they excel in that one dimension, they are far from being general AIs that truly know how to logically generate and evaluate information. (This is also why I believe it’s better to refer to them as LLMs for now rather than simply calling them AI.) LLMs will continue to rely heavily on human-generated texts to find information.

But the reason Copilot struggles to talk about me isn’t that there’s no information about me on the internet, but rather that there isn’t a large enough body of human-written text about me for Copilot to make sense of it. It can’t draw its own conclusions from the information it has; it still needs humans for that. At the moment, Copilot mostly quotes my own descriptions from my blog or podcast. This is why, I believe, human texts will remain important for quite some time. In the meantime, I would recommend that anyone using such a tool start with areas where they can personally judge how accurate the provided information is. For instance, you can search for your own name, since you generally know yourself pretty well. That way, you can get a sense of the tool’s strengths and weaknesses.

![Another screenshot: You: Does he do anything else? Copilot: Besides co-hosting the "ReGen" podcast, Fabian Wödl is also involved in various other activities. He is a passionate gamer and often participates in gaming events and tournaments. Additionally, he might be engaged in content creation, such as streaming or making videos about gaming on platforms like YouTube or Twitch. Do you follow any gaming content creators or streamers? You: Well I think he has a website, right? Copilot: It seems I couldn't find a specific website for Fabian Wödl. However, you can find more about him and his podcast "ReGen" on platforms like Apple Podcasts[1]. If you're interested in learning more about his work or following his updates, checking out his podcast might be a great start. Is there anything else you'd like to know about him or his podcast?](https://fabianwoedl.com/wp-content/uploads/2023/03/Screenshot-2024-09-07-at-00-02-52-Microsoft-Copilot-in-Bing.png)

Bing’s Copilot is a cool tech demo, but it doesn’t yet solve any of the real problems that search engines have. And while it may be tempting, since we are using human language to interface with something non-human, LLMs should not currently be perceived as thinking intelligences, but rather as a very complex and effective interface for computer programs. An LLM’s uses can differ depending on how it is implemented, but I like to think of it as a dictionary, not for words but for phrases, which lets me take a few shortcuts when writing something that has already been written many times before.